XGBoost (Extreme Gradient Boosting)

An optimized gradient boosting machine learning algorithm

About XGBoost

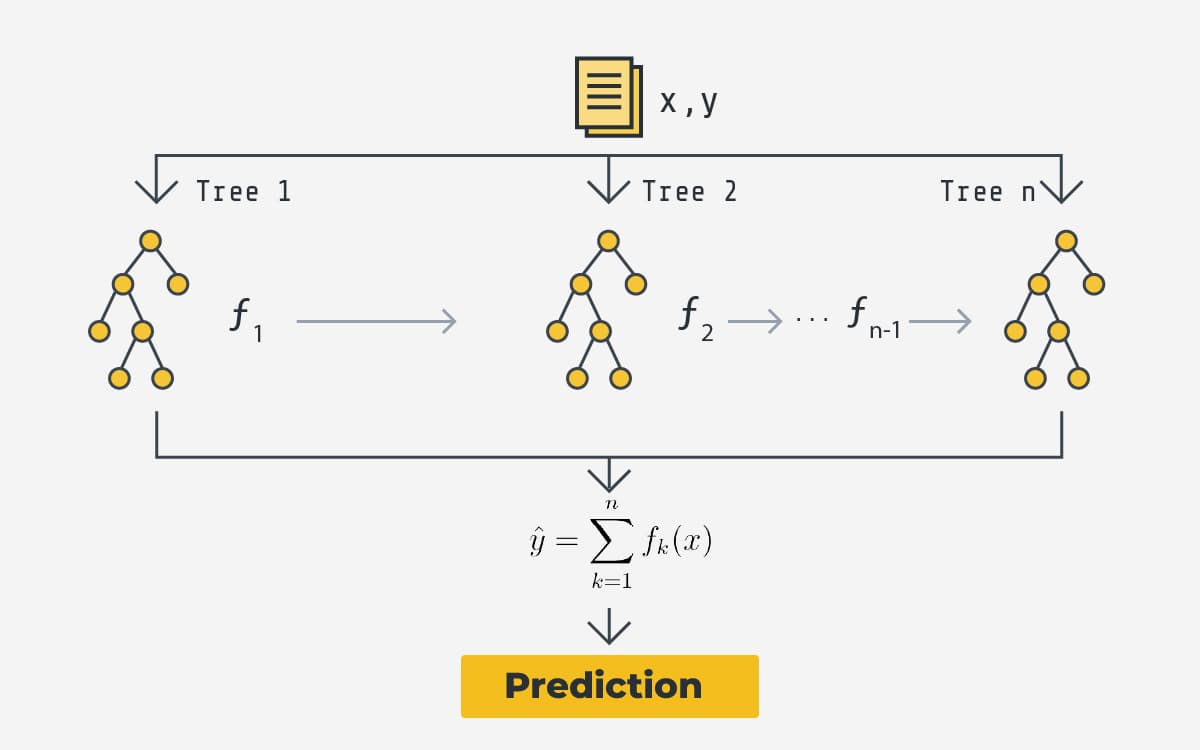

XGBoost is an advanced implementation of gradient boosted decision trees designed for speed and performance. It builds decision trees sequentially, where each new tree focuses on correcting the errors of the previous ones, combining many weak learners into a strong predictive model.

Key Features:

- Gradient Boosting: Builds trees sequentially to correct residual errors

- Regularization: Includes L1 (Lasso) and L2 (Ridge) regularization to prevent overfitting

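- Parallel Processing: Parallelizes split finding across features within each tree (the trees themselves are still built sequentially)

- Handling Missing Values: Learns a default branch direction for missing values at each split

- Tree Pruning: Grows each tree up to the maximum depth, then prunes back splits whose gain does not exceed the threshold γ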

How XGBoost Works:

1. Makes an initial prediction F₀ (often the mean of the target values)

2. Calculates residuals (actual - predicted) for each instance; for squared-error loss these equal the negative gradients of the loss

3. Builds a decision tree to predict these residuals

4. Updates predictions by adding the new tree's predictions, scaled by the learning rate η

5. Repeats steps 2-4 for the specified number of trees

6. Final prediction: ŷ = F₀ + ∑ₖ η × fₖ(x), where fₖ are the individual trees
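The loop described above can be sketched in plain Python. This is a minimal illustration under simplifying assumptions, not XGBoost's actual implementation: it uses squared-error loss, a single feature, depth-1 trees (stumps), and no regularization. The helper names `fit_stump`, `boost`, `predict`, and `mse` are hypothetical.

```python
from statistics import mean


def fit_stump(xs, residuals):
    """Fit a depth-1 tree (stump) on one feature by minimising squared error.

    Assumes xs contains at least two distinct values.
    """
    best = None
    # Try every threshold that leaves at least one point on each side.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value, right_value = mean(left), mean(right)
        sse = (sum((r - left_value) ** 2 for r in left)
               + sum((r - right_value) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_value, right_value)
    return best[1:]  # (threshold, left_value, right_value)


def stump_predict(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def boost(xs, ys, n_trees=50, eta=0.1):
    base = mean(ys)                      # initial prediction: mean of targets
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]   # actual - predicted
        stump = fit_stump(xs, residuals)                 # tree fitted to residuals
        stumps.append(stump)
        # Add the new tree's output, scaled by the learning rate.
        preds = [p + eta * stump_predict(stump, x) for p, x in zip(preds, xs)]
    return base, eta, stumps


def predict(model, x):
    base, eta, stumps = model
    # Final prediction: initial value plus the eta-scaled sum of all trees.
    return base + eta * sum(stump_predict(s, x) for s in stumps)


def mse(ys, preds):
    return mean((y - p) ** 2 for y, p in zip(ys, preds))


# Toy data (illustrative only): y = x^2 on ten points.
xs = [float(i) for i in range(10)]
ys = [x * x for x in xs]
model = boost(xs, ys, n_trees=100, eta=0.1)
print("baseline MSE:", mse(ys, [mean(ys)] * len(ys)))
print("boosted MSE: ", mse(ys, [predict(model, x) for x in xs]))
```

A smaller η makes each tree contribute less, so more trees are needed to reach the same training error, which is the usual trade-off when tuning the learning rate.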

Mathematical Foundation

Objective Function: Obj(θ) = L(θ) + Ω(θ)

Where L is the loss function and Ω is the regularization term

Regularization: Ω(fₖ) = γT + ½λ‖w‖²

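T = number of leaves, w = leaf weights, γ = penalty per leaf, λ = L2 regularization strength. An L1 term α‖w‖₁ is added when L1 regularization is enabled.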
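To see how γ and λ enter tree construction, the sketch below uses the standard second-order formulation of gradient boosting, which is not spelled out in this section and is stated here as an assumption: with G and H the sums of first and second derivatives of the loss over the instances in a leaf, the optimal leaf weight is -G / (H + λ), and a split is worth keeping only if its gain exceeds γ. The function names are hypothetical.

```python
def leaf_weight(gradients, hessians, lam=1.0):
    """Optimal leaf weight under an L2 penalty: w* = -G / (H + lambda)."""
    G, H = sum(gradients), sum(hessians)
    return -G / (H + lam)


def leaf_score(gradients, hessians, lam):
    """Structure score G^2 / (H + lambda) of a single leaf."""
    G, H = sum(gradients), sum(hessians)
    return G * G / (H + lam)


def split_gain(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Objective reduction from splitting a leaf in two, minus the per-leaf penalty gamma."""
    parent = leaf_score(g_left + g_right, h_left + h_right, lam)
    children = leaf_score(g_left, h_left, lam) + leaf_score(g_right, h_right, lam)
    return 0.5 * (children - parent) - gamma


# Squared-error loss 0.5 * (y - pred)^2 gives gradient = pred - y and hessian = 1.
ys = [1.0, 1.0, 5.0, 5.0]
pred = 3.0
grads = [pred - y for y in ys]
hess = [1.0] * len(ys)

# Candidate split: first two instances go left, last two go right.
print(leaf_weight(grads[:2], hess[:2], lam=0.0))   # lambda = 0 recovers the mean residual
print(leaf_weight(grads[:2], hess[:2], lam=1.0))   # lambda > 0 shrinks the weight toward 0
print(split_gain(grads[:2], hess[:2], grads[2:], hess[2:], lam=1.0, gamma=0.0))
```

Raising λ shrinks every leaf weight toward zero, while raising γ makes marginal splits unprofitable, so both act against overfitting in different ways.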

Gradient Boosting: Updates are computed using:

Fₖ(x) = Fₖ₋₁(x) + ηfₖ(x)

where η is the learning rate

Applications in Market Forecasting

XGBoost excels in financial applications due to its:

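- Ability to handle mixed data types (numerical features directly; categorical features via encoding or, in recent versions, native categorical support)

- Robustness to outliers in the features (tree splits depend only on value ordering) and to missing data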

- Feature importance analysis for interpretability

- High predictive accuracy with proper tuning

- Efficiency in processing large financial datasets